2025年4月12日, OpenAI執行長Sam Altman說,人工智慧革命將持續下去。 在與TED負責人Chris Anderson的現場對話中,Altman討論了人工智慧的驚人增長,並展示了像ChatGPT這樣的模型如何很快成為我們自己的延伸。 他還討論了安全、權力和道德權威的問題,反思了他設想的世界——人工智慧幾乎肯定會超過人類的智慧。

The AI revolution is here to stay, says Sam Altman, the CEO of OpenAI. In a probing, live conversation with head of TED Chris Anderson, Altman discusses the astonishing growth of AI and shows how models like ChatGPT could soon become extensions of ourselves. He also addresses questions of safety, power and moral authority, reflecting on the world he envisions — where AI will almost certainly outpace human intelligence. (Recorded live at TED2025 on April 11, 2025)

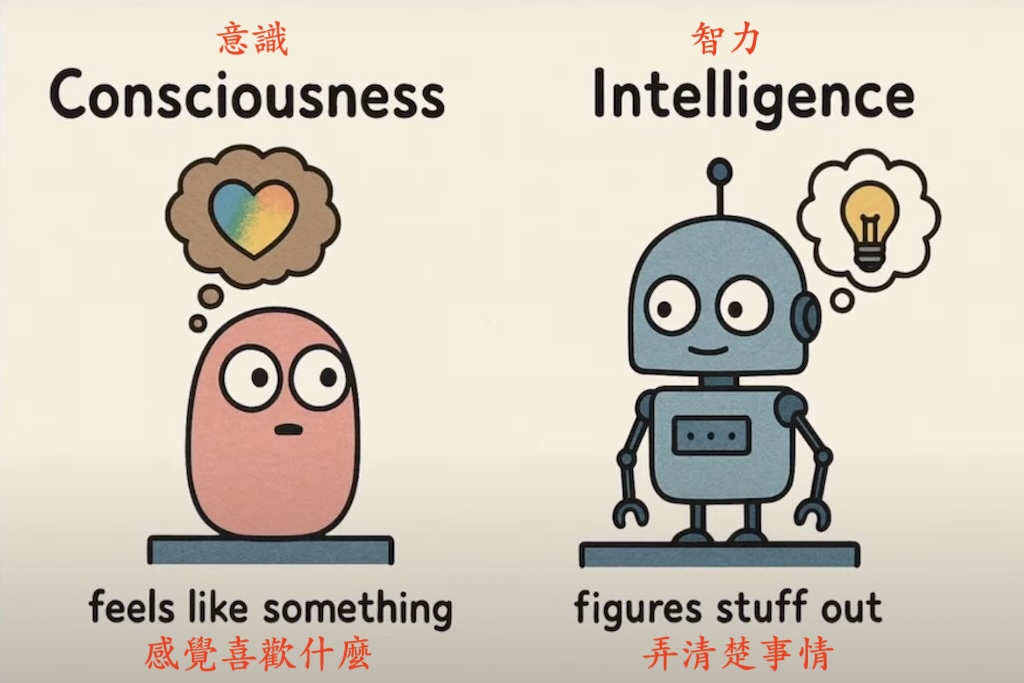

提示:建立一個圖表,以細微的方式解釋,意識和智力之間的區別,融入一些幽默。

Prompt: Create a diagram that explains in a nuanced way, incorporating some humor, the difference between consciousness and intelligence.

Sora created a diagram explaining the difference between intelligence and consciousness, demonstrating GPT-4o's multimodal capabilities.

Prompt: Draw a comic strip. Imagine Charlie Brown as a philosophical AI pondering his existence.

提示:畫一個滑稽的連環畫。把查理·布朗想象成一個具有哲學素養的AI,正在思考他的存在。

TED head Chris Anderson shows ChatGPT reimagining Charlie Brown as a philosophically minded AI, prompting a discussion of originality and copyright.

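To complete the reservation at the Top of Vancouver Revolving Restaurant (溫哥華旋轉餐廳之巔), the AI needs your first name, last name, phone number, and email address. A credit card is also required to guarantee the reservation.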

Would you be willing to give your personal information to an AI?

ChatGPT produced the following summary of key quotes, central themes, and actionable insights from the TED2025 conversation between Sam Altman and Chris Anderson:

以下是 ChatGPT整理出TED2025 對談中的 重點引言、核心主題 與 行動洞察:

🔑 重點引言(Key Quotes)

🧠 關於 AI 的能力與未來

「現在的模型已經聰明到能處理大多數人日常想做的事情。」

— Sam Altman 談當前前沿模型的實用程度

「這個模型將會在你的一生中逐漸了解你。」

— Sam 描述記憶功能的進化

「你將會終身與 ChatGPT 對話…… 它將成為你的延伸。」

— AI 將成為個人助理與思維夥伴

「我個人最興奮的是 AI 在科學上的應用。」

— AI 將推動未來科學發現

⚠️ 關於風險與安全

「我們並沒有藏著什麼具有意識的秘密模型。」

— 澄清外界關於 AGI 內部突破的謠言

「當 AI 代理能在網路上操作,錯誤的代價將變得極高。」

— 警示 AI 代理的潛在風險

「你不能等到某天模型變得聰明才開始關心安全問題。你必須從一開始就關注。」

— 強調前期部署時的風險意識

🔄 關於道德與責任

「是誰賦予你這樣的道德權力來影響人類未來?」

— Chris 引用 GPT-4o 提出的大哉問

「我們是 AI 革命中的一個聲音,正努力以負責任的方式推進這項技術。」

— Sam 闡述 OpenAI 的角色

「我不知道生命的意義是什麼,但我確信它跟嬰兒有關。」

— 引用 Ilya 的話表達身為父親的轉變

💰 關於創作者與收益模式

「我們很可能需要一種新的創作經濟模式來因應這波變化。」

— 談創作者與 AI 的共存

「若你點名一位在世藝術家,我們目前不會生成其風格的作品,除非他們選擇加入。」

— 說明 OpenAI 在風格模仿上的限制

🫱 關於集體治理

「不是讓 100 個人關在一個房間決定未來,而是讓幾億人共同參與價值對齊的過程。」

— 主張以大眾集體價值取代精英決策

「AI 能幫助我們變得更有智慧,做出更好的集體決策。」

— 期待 AI 協助社會治理與協商

📚 核心主題(Themes)

1. 技術加速

用戶成長、能力演進與工具整合速度驚人。

模型從單一語言處理進化到 多模態、能行動的 AI 代理。

AI 將深入 科學、醫療、工程與日常生活。

2. 創意自由 vs 智財權利

AI 創作帶來創意民主化,同時挑戰現行 著作權制度。

需要發展 新型授權與收益共享機制。

3. 安全與對齊(Alignment)

安全依賴「迭代部署 + 實際測試回饋」。

OpenAI 已建立 風險準備機制,以判斷釋出與否。

AI 代理 的安全性將成為下一波挑戰重點。

4. AGI 的模糊邊界

目前無明確標準,Sam 主張重點不在定義,而在 如何安全地逐步進化模型。

強調長期的指數型成長曲線,而非瞬間突破。

5. 道德責任與信任基礎

面對影響全球的技術,領導者需承擔道德責任。

Sam 承認 OpenAI 並不完美,但強調其 使命與透明度。

6. 父親視角的轉變

有了孩子之後,更加意識到未來世界的重要性。

願為所有人的未來承擔責任,並非只是為自己或孩子。

🧭 行動洞察(Actionable Insights)

🔐 給開發者與技術人員:

建立信任是產品核心,否則再強大也無人敢用。

從初期即建立 安全機制與監測框架,避免事後補救。

謹慎開源:能力越高,濫用風險越大,需設計合理邊界。

🎨 給創作者與藝術工作者:

主動爭取 風格授權機制 與 收益分潤模式。

避免被動等待法律修正,積極參與 AI 創作生態的建構。

🏛 給政策制定者:

建立 外部安全測試機構,監管前沿模型。

推動 多方國際合作與高層對話,設立共同基線與紅線。

特別關注 AI 代理的自主權與網路行動能力。

👥 給社會與公民:

避免「挺 AI vs 反 AI」的對立思維,支持 理性、持續的公共討論。

鼓勵參與 模型價值設定與回饋系統,共同塑造技術方向。

把握機會,但也要 要求負責與問責機制 的健全化。

🔑 Key Quotes

🧠 On AI’s Capabilities & Future

“The models are now so smart that for most of the things most people want to do, they’re good enough.”

— Sam Altman, on the current strength of frontier models.

“This model will get to know you over the course of your lifetime.”

— Sam on ChatGPT’s evolving ‘Memory’ feature.

“You will talk to ChatGPT over the course of your life… it’ll become this extension of yourself.”

— Vision for long-term, AI-assisted living.

“The thing I’m personally most excited about is AI for science.”

— Highlighting AI’s role in future discovery and progress.

⚠️ On Risk & Safety

“There’s no secret conscious model we’re sitting on.”

— Dispelling rumors of hidden AGI breakthroughs.

“With AI agents that click around the internet, mistakes are much higher stakes.”

— Acknowledging the dangers of agentic AI.

“You don’t wake up one day and say, now we care about safety. You have to care all along the exponential curve.”

— On the need for proactive, not reactive, safety planning.

🔄 On Ethics & Responsibility

“Who granted you the moral authority to do that?”

— Chris quoting GPT-4o’s question to Sam.

“We’re one voice in this AI revolution trying to do the best we can.”

— Sam on OpenAI’s mission and limitations.

“I don’t know what the meaning of life is, but for sure it has something to do with babies.”

— Quoting cofounder Ilya Sutskever to express newfound parental perspective.

💰 On Monetization & Creators

“We probably need to figure out some sort of new model around the economics of creative output.”

— Sam on compensating creators fairly.

“If you name a living artist, it won’t generate in their style. If they opt in, then yes.”

— Clarifying OpenAI’s current guardrails.

🫱 On Collective Governance

“Hundreds of millions of people should help define how AI behaves—not just 100 people in a room.”

— Arguing for democratized alignment over elite curation.

“I think AI can help us be wiser and make better collective governance decisions.”

— Hope for AI-assisted decision-making at scale.

📚 Core Themes

1. Technological Acceleration

Unprecedented user growth, capabilities expanding rapidly.

Transition from simple language models to integrated, multimodal agents.

AI’s involvement in science, medicine, coding, and everyday life is deepening.

2. Creative Empowerment vs. IP Concerns

Tension between AI democratizing creativity and copyright violations or attribution concerns.

Need for new business and licensing models for creators in the AI era.

3. Safety & Alignment

Emphasis on iterative deployment and real-world feedback loops.

OpenAI’s Preparedness Framework governs safe releases.

Recognition that AI agents with autonomy pose the biggest upcoming safety challenge.

4. Definition & Thresholds of AGI

No consensus on what constitutes Artificial General Intelligence (AGI).

Importance of gradualism over discrete thresholds—the curve, not the cliff, matters.

5. Governance & Moral Authority

Deep ethical introspection: Who gets to shape the future?

Sam acknowledges both success and criticism, pledging humility and caution.

6. Parental Perspective

Becoming a parent changed how Sam values his time and deepened his emotional investment in the future.

Expresses a deeper commitment to ensuring a safe world for future generations.

🧭 Actionable Insights

🔐 For Developers & Technologists:

Build for trust: Agentic AI must be trustworthy at its core, or users won’t adopt it.

Iterative deployment + feedback is key to safe scaling; don’t wait until it is too late to integrate safety.

Open-source responsibly: With great openness comes great potential for misuse.

🎨 For Creators:

Opt-in systems and revenue-sharing mechanisms will become essential.

Creators should proactively negotiate and license their style if desired, rather than rely on outdated copyright frameworks.

📊 For Policymakers:

Current safety frameworks need external, standardized auditing for frontier models.

Encourage AI firms to engage in global multi-stakeholder summits while also surveying broader public opinion.

Policies should consider agentic AI and internet-level access as high-priority risk zones.

👥 For Society:

Avoid binary thinking (pro-AI vs. anti-AI). Instead, support nuanced dialogue with hope + caution.

Collective feedback loops (via AI interfaces) may allow mass value alignment at unprecedented scale.

Embrace the transformation, but demand accountability and transparency from major AI actors.